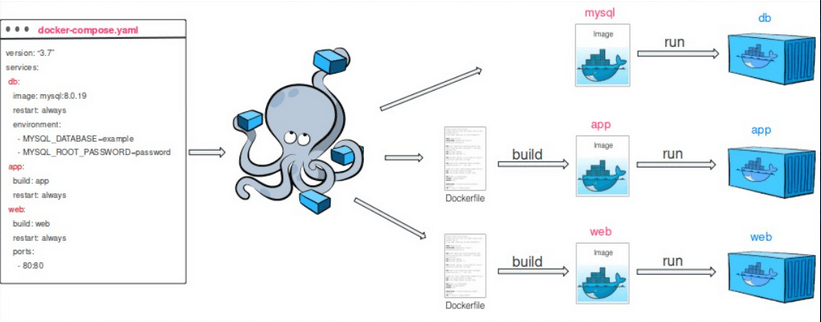

Today we continue the topic of best practices for containerization with Docker. If you haven't read the previous parts about Docker itself or about best practices, you will find links to those articles at the bottom of the page.

Best Practices - Continued

There are still many steps you can take to improve the quality and security of your images. One of them is to avoid running the application in the container as the root user. By default, every instruction in a Dockerfile is executed as root. This is entirely desirable while configuring the image at build time, since installing a package often requires administrator privileges. However, before the CMD or ENTRYPOINT instruction (the ones that define what runs at runtime), it is good practice to switch to a user with limited permissions, in line with the principle of least privilege. Some images, such as node, ship with a dedicated user whose permissions are already appropriately restricted.

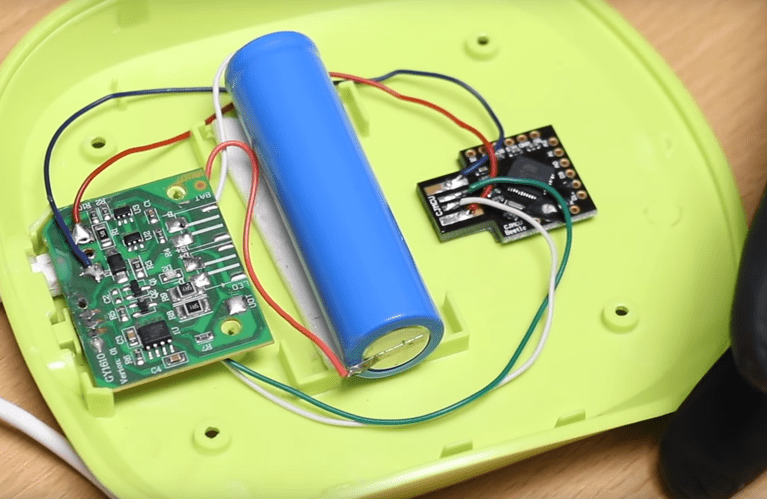

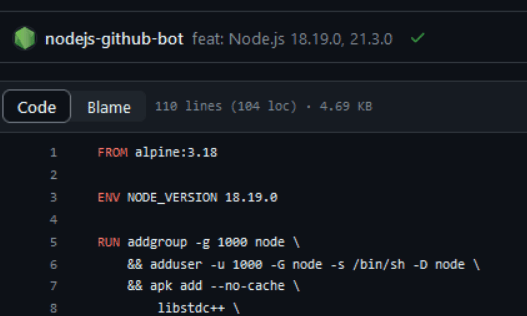

Figure 1 - Dockerfile of the Node base image in version 18, source: GitHub, docker-node

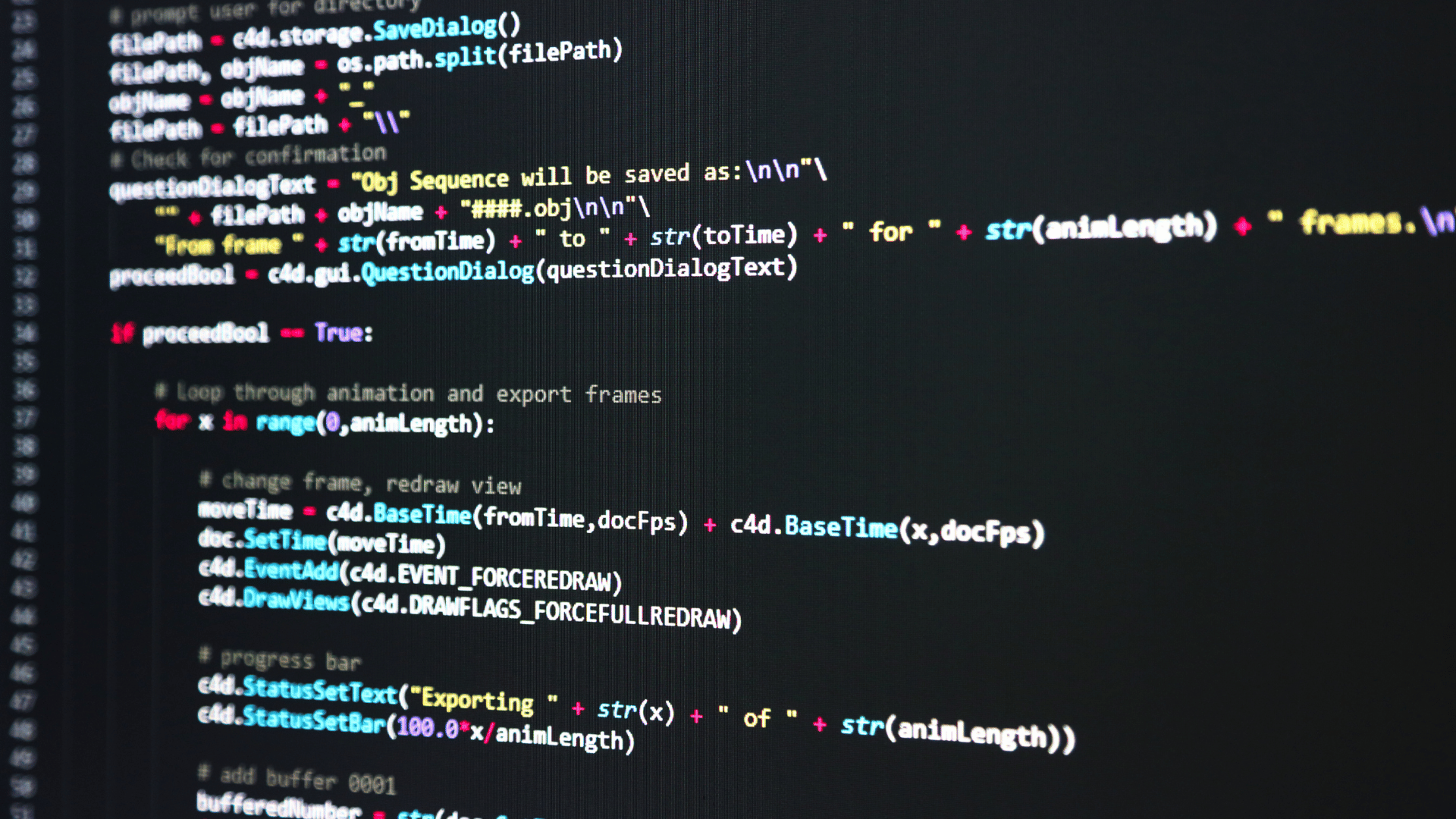

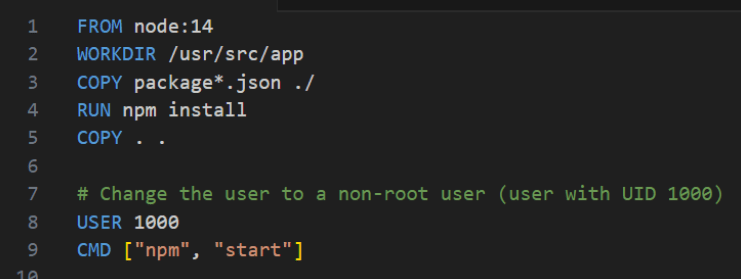

To change the user in the Dockerfile, use the USER instruction.

Figure 2 - Changing the user in the Dockerfile
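As a minimal sketch of the switch described above, the snippet below writes a small Dockerfile that performs a build-time step as root and then drops to the `node` user provided by the Node image before CMD. The file name `Dockerfile.nonroot`, the `/app` directory, the `curl` package, and `server.js` are assumptions made for illustration only.

```shell
# Write an illustrative Dockerfile; the file name, paths, the curl package
# and server.js are assumptions, not taken from a real project.
cat > Dockerfile.nonroot <<'EOF'
FROM node:18

# Build-time steps run as root by default, e.g. installing OS packages.
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*

WORKDIR /app

# Copy the code and hand ownership to the unprivileged user.
COPY --chown=node:node . .

# Everything from here on, including CMD at runtime, runs as "node".
USER node
CMD ["node", "server.js"]
EOF

# To try it (requires a Docker daemon):
#   docker build -f Dockerfile.nonroot -t my-app:nonroot .
#   docker run --rm my-app:nonroot whoami
```

Placing USER as late as possible keeps root available for the steps that need it while the process started by CMD runs with reduced privileges.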

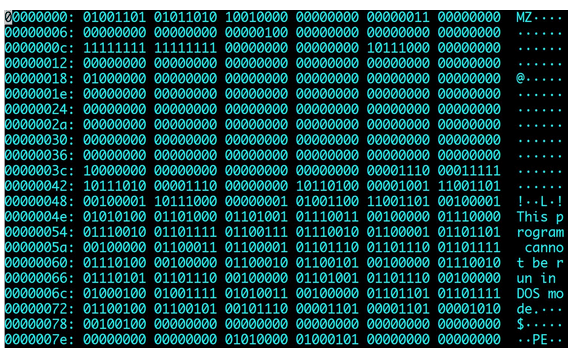

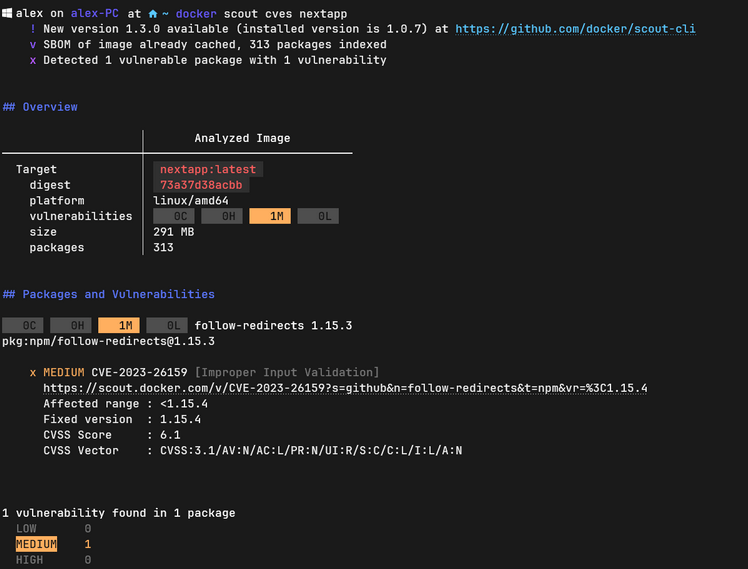

Use a tool for scanning images for vulnerabilities - docker scout. You might have come across a similar tool called docker scan, but it has been deprecated in favor of docker scout. The scout command analyzes an image against known vulnerabilities collected in vulnerability databases, such as Common Vulnerabilities and Exposures (CVE). The results let us assess the severity of the vulnerabilities in our image and determine whether they pose a direct threat to the data processed by our application.

Figure 3 - Vulnerability scanning using the 'docker scout cves' command
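For readers who prefer text to a screenshot, here is a small sketch of how the scan could be wrapped in a shell function. The function name `scan_with_scout` and the default tag `my-app:latest` are hypothetical; the severity filter is just one reasonable choice.

```shell
# Scan an image with Docker Scout and fail on serious findings.
# The default image tag is a hypothetical placeholder; pass your own.
scan_with_scout() {
  image="${1:-my-app:latest}"
  # --only-severity narrows the report to the listed severities;
  # --exit-code makes the command return a non-zero status when
  # matching vulnerabilities are detected.
  docker scout cves --only-severity critical,high --exit-code "$image"
}

# Usage (requires Docker with the Scout plugin installed):
#   scan_with_scout node:18
```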

Such scanning can also be part of the DevOps process (we recommend our previous article on this topic). External scanners such as Trivy can be plugged into the CI/CD pipeline, thereby increasing the security of our code. It's also worth remembering that running a vulnerable image can affect the security of the host and of other containers (for example, through the Dirty COW exploit).
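A CI step built around Trivy might look roughly like the sketch below. The function name `ci_scan_with_trivy` and the default image tag are assumptions, and the severity threshold should be adapted to your own policy.

```shell
# Gate a CI job on the result of a Trivy image scan.
# The default image tag is a hypothetical placeholder.
ci_scan_with_trivy() {
  image="${1:-my-app:latest}"
  # --severity limits the findings that count;
  # --exit-code 1 makes Trivy fail the step when any of them are found.
  trivy image --severity HIGH,CRITICAL --exit-code 1 "$image"
}

# Usage inside a pipeline script (requires the trivy binary):
#   ci_scan_with_trivy registry.example.com/my-app:1.2.3
```

Because the function returns Trivy's exit status, most CI systems will mark the job as failed without any extra logic.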

Another valuable piece of advice is to use userns-remap. This feature remaps the UIDs and GIDs used inside the container to a different, unprivileged range on the host. Without it, if an application runs as root in the container and has a volume mounted from the host (bind mount), root in the container has the same UID as root on the host. In some cases, this can lead to dangerous situations. If a user is allowed to run containers on the host and bind-mounts a file they do not have access to, then without remapping they can read that file from inside the container despite lacking permissions on the host, because root in the container is the same UID as root on the host. However, there is a simple way to address this. Edit the daemon.json file (by default /etc/docker/daemon.json on Linux) as follows:

    {
      "userns-remap": "default"
    }

and then restart the Docker daemon. We recommend an article by Dreamlab on this topic:

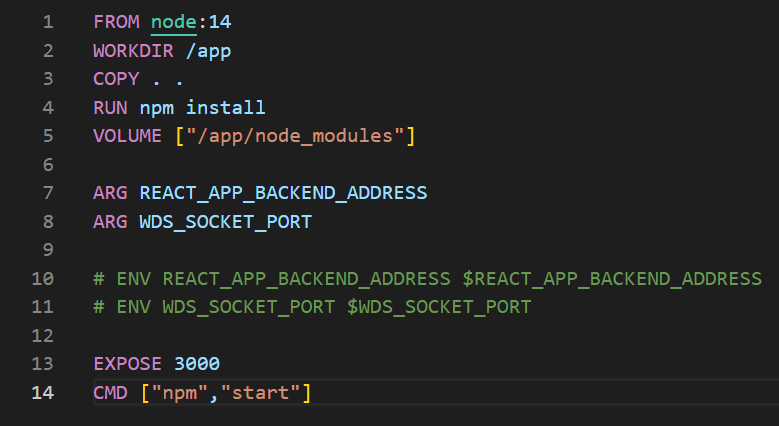
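Once daemon.json contains the userns-remap setting, the restart and a quick sanity check could be sketched as follows. This assumes a systemd-based Linux host and sufficient privileges; the function name `apply_userns_remap` is hypothetical.

```shell
# Restart the daemon and check that user-namespace remapping is active.
# Assumes a systemd-based Linux host; adjust the restart command otherwise.
apply_userns_remap() {
  sudo systemctl restart docker

  # With "default", Docker uses a "dockremap" user whose subordinate
  # UID/GID ranges are listed in these files.
  grep dockremap /etc/subuid /etc/subgid

  # The daemon lists "name=userns" among its security options
  # when remapping is enabled.
  docker info --format '{{json .SecurityOptions}}'
}

# Usage:
#   apply_userns_remap
```

Note that enabling remapping makes the daemon use a separate storage namespace, so previously pulled images and existing containers will not be visible until the setting is reverted.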

Try to use Docker's cache as efficiently as possible - when writing a Dockerfile, place instructions that rarely change for a given image at the top, and those that change frequently (such as copying new code with COPY . .) at the bottom of the file. Docker rebuilds a changed layer and every layer after it, so this ordering ensures that the earlier layers are reused from the cache rather than rebuilt with every code change.
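The ordering can be illustrated with a Node project, where dependencies change far less often than source code. This is only a sketch: the file name `Dockerfile.cache`, the `/app` directory, and `server.js` are assumptions.

```shell
# Write an illustrative, cache-friendly Dockerfile (names are assumptions).
cat > Dockerfile.cache <<'EOF'
FROM node:18
WORKDIR /app

# Rarely changes: the dependency manifests and the install step stay
# cached as long as package*.json is unchanged.
COPY package*.json ./
RUN npm ci

# Changes often: source code goes last, so an edit only rebuilds
# the layers from this point down.
COPY . .
CMD ["node", "server.js"]
EOF

# To try it (requires a Docker daemon):
#   docker build -f Dockerfile.cache -t my-app:cache .
```

If `COPY . .` came before `RUN npm ci`, every source change would invalidate the dependency layer and force a full reinstall.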

Do not assume that files placed inside a Docker image are secure - the image is not a good place to store secrets. Anyone who can pull the image can read its layers. Secrets should be provided to the container at runtime by a dedicated mechanism such as Docker secrets or Kubernetes Secrets.
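On the container side, a runtime secret usually arrives as a mounted file. The helper below is a minimal sketch of reading one in an entrypoint script. `/run/secrets` is where Docker Swarm mounts secrets; the `SECRETS_DIR` override, the function name `read_secret`, and the secret name `db_password` are assumptions for illustration.

```shell
# Read a secret from a file mounted into the container at runtime.
# SECRETS_DIR is a hypothetical override so the helper can also be
# tried outside a container; it defaults to /run/secrets.
read_secret() {
  secret_file="${SECRETS_DIR:-/run/secrets}/$1"
  if [ ! -r "$secret_file" ]; then
    echo "secret '$1' is not available" >&2
    return 1
  fi
  cat "$secret_file"
}

# Usage in an entrypoint script (db_password is an assumed secret name):
#   DB_PASSWORD="$(read_secret db_password)" || exit 1
```

Nothing sensitive ends up in an image layer this way, and rotating the secret does not require rebuilding the image.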

Use the .dockerignore file to ensure that sensitive files are not included in the image - .dockerignore is Docker's counterpart to .gitignore. It lets you list files that should be left out of the build context and therefore out of the image, such as private keys.

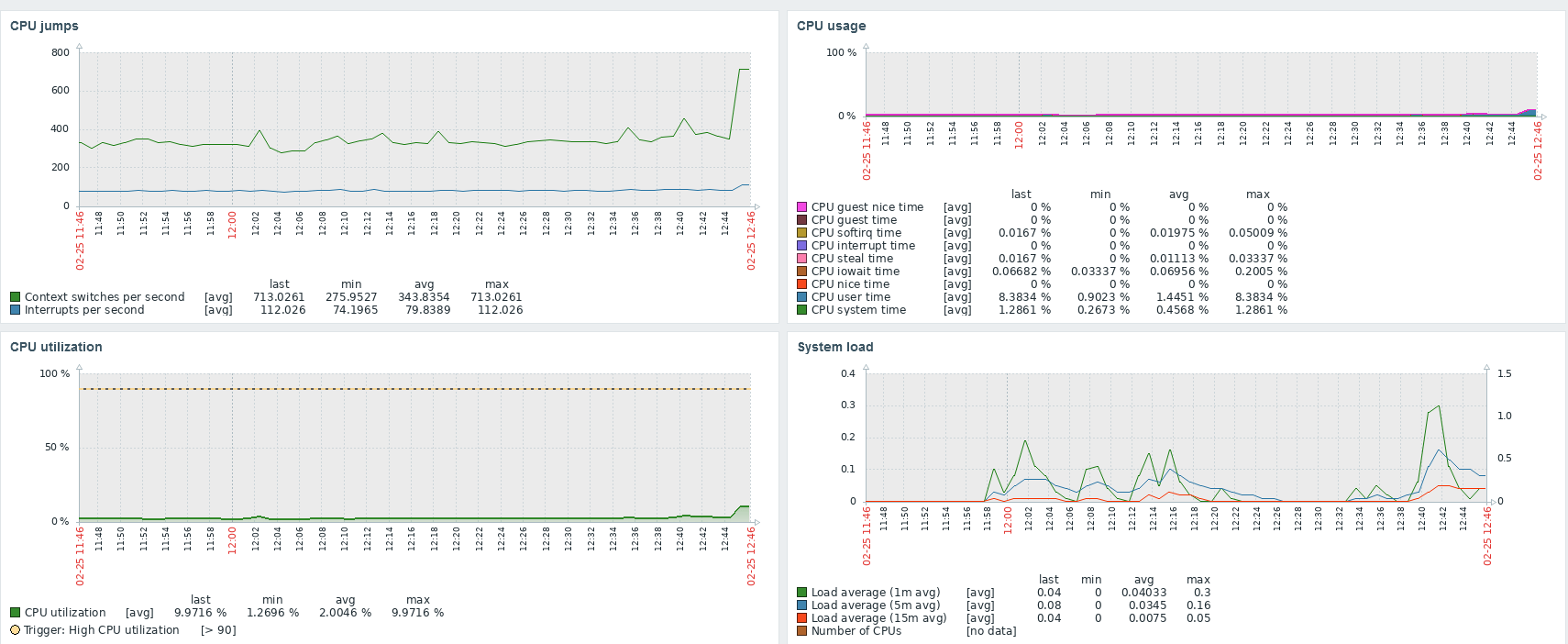
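A starting point for such a file might look like the sketch below. The listed patterns are assumptions about a typical project and should be adapted to your own layout; note that the snippet overwrites any existing .dockerignore in the current directory.

```shell
# Write an example .dockerignore (patterns are illustrative assumptions).
cat > .dockerignore <<'EOF'
# Version control and locally installed dependencies
.git
node_modules

# Secrets and credentials that must never reach the build context
.env
*.pem
*.key
id_rsa*
EOF
```

Excluding large directories such as `.git` and `node_modules` also shrinks the build context, which speeds up builds as a side effect.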

Monitor what happens in the container - monitoring your application is an important element of security. It lets you spot worrying application metrics at an early stage and check whether they were caused by someone attacking your application. With an orchestrator like Kubernetes, tools such as Prometheus and Grafana are often used to give IT staff insight into the containerized system.

Limit the resources that a given container can use - in a production environment where many containers are running, make sure that a single container cannot consume all the resources needed by the other applications in the cluster. Both Docker and Kubernetes let you set CPU and memory (RAM) limits.
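With plain Docker, such limits are passed as flags to `docker run`. The sketch below uses arbitrary example values; the function name `run_limited` and the default image tag are hypothetical.

```shell
# Start a container with CPU, memory and process limits.
# The limit values and the default image tag are illustrative assumptions.
run_limited() {
  image="${1:-my-app:latest}"
  # --memory caps RAM, --cpus caps CPU time (1.5 = one and a half cores),
  # --pids-limit bounds the number of processes inside the container.
  docker run --rm --memory=512m --cpus=1.5 --pids-limit=200 "$image"
}

# Usage (requires a Docker daemon):
#   run_limited node:18
```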

Remember to secure the host system - Linux system hardening is a topic that could fill many articles. We will only mention that it is worth making backups of essential files, monitoring server behavior, guarding secrets (SSH keys or passwords) carefully, and setting permissions correctly in the system. For those interested, we recommend exploring topics such as SELinux or AppArmor.

Have an incident response plan - if a security incident or another incident affecting customers occurs, have a ready-made plan and follow it. In a stressful situation, delaying decisions can negatively impact the entire infrastructure and, consequently, the business. An incident response or infrastructure recovery plan in the event of a failure is essential for running a high-quality service.

Conclusion

We hope that our short series on Docker and best practices contributes to the increased security and quality of your services. If you are hungry for deeper knowledge about container security, we leave you with a few links below for further exploration. See you next week!

Sources:

- https://docs.docker.com/engine/security/userns-remap/

- https://docs.docker.com/scout/quickstart/

- https://blog.aquasec.com/dirty-cow-vulnerability-impact-on-containers

- https://docs.docker.com/config/containers/resource_constraints/

- https://blog.aquasec.com/docker-security-best-practices#Scan-and-Verify

- https://medium.com/@mccode/dont-embed-configuration-or-secrets-in-docker-images-7b2e0f916fdd

- https://www.innokrea.pl/blog/wirtualizacja-konteneryzacja-i-zasady-projektowe-dotyczace-kontenerow-wedlug-redhat/

- https://www.innokrea.pl/blog/docker-konteryzacja-wirtualizacja/

- https://www.youtube.com/watch?v=KINjI1tlo2w&list=PLBf0hzazHTGNv0-GVWZoveC49pIDHEHbn&index=1