Helm for the Second Time – Versioning and Rollbacks for Your Application

We describe how to perform an update and rollback in Helm, how to flexibly overwrite values, and discover what templates are and how they work.

Today we explain what virtualization actually is and what types of it exist, and we present the design principles that Red Hat proposed in 2017 for containers deployed in orchestrated environments, including cloud environments. Similar principles can be found throughout computing, for example DRY, KISS, and SOLID in programming. If you're interested in reading about clean code, we encourage you to explore our series on Clean Code.

To understand the essence of virtualization in operating systems, we first need to explain its fundamental concept: the hypervisor, also known as a virtual machine monitor (VMM). It is a software component that creates and manages virtual machines (VMs) on a physical machine. The hypervisor sits between the physical hardware and the virtual machines, allowing multiple operating systems to run concurrently on a single physical machine.

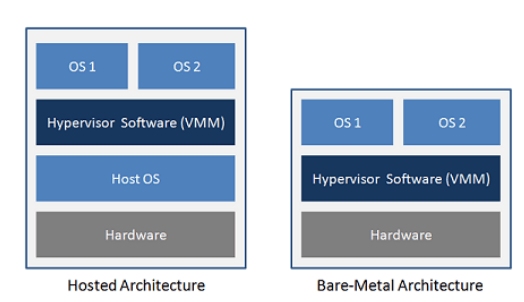

There are two primary types of hypervisors:

Type 1 Hypervisor (Bare Metal Hypervisor) – more commonly found in server solutions, including data centers. It runs directly on the physical hardware, without a host operating system, and therefore has direct access to hardware resources. Examples include Microsoft Hyper-V, Citrix XenServer, and VMware ESXi.

Type 2 Hypervisor (Hosted Hypervisor) – more common on user computers. It runs on top of a conventional operating system (the host OS), relies on the host OS to manage hardware resources, and therefore adds an extra layer of abstraction. Examples include VMware Workstation, Oracle VirtualBox, and Parallels Desktop.

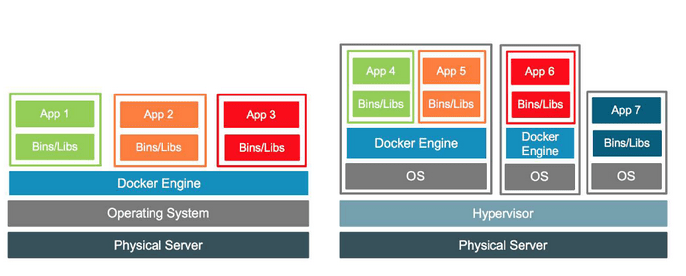

Figure 1 – type 2 architecture vs type 1 architecture, source vgyan.in

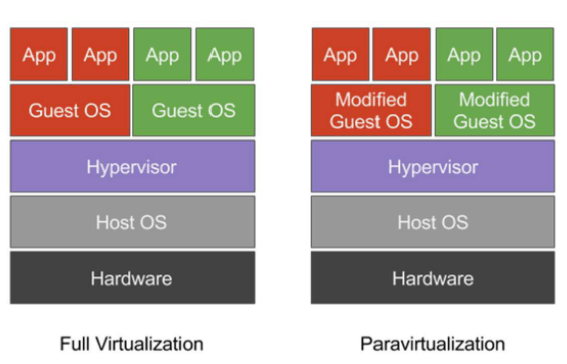

Virtualization is a common computing technique for creating virtual instances of hardware or software on a single physical computer system. In other words, it presents logical resources that are backed by the underlying physical components. Its goals are to use hardware resources efficiently and to isolate different environments and applications from one another, which can improve efficiency, security, and ease of management. Virtualization can be classified in several ways; one common distinction is between full virtualization and paravirtualization.

In full virtualization, the hypervisor presents the guest with a complete virtual replica of the hardware. The main benefit of this approach is that an unmodified operating system can run as a guest, and the guest remains completely unaware that it is virtualized. Full virtualization relies on techniques such as direct execution, in which non-sensitive instructions run natively on the physical CPU without hypervisor intervention, and binary translation, in which sensitive instructions are rewritten on the fly into safe equivalents. As a result, simple CPU instructions are executed directly, while sensitive ones are translated dynamically. To improve performance, the hypervisor can cache recently translated instructions so that they do not have to be translated again.
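To make the split between direct execution and binary translation more concrete, here is a toy Python model. It is a sketch under heavy simplifying assumptions: instructions are plain strings rather than machine code, the set of "sensitive" instructions is illustrative, and `ToyFullVirtualizer` and the `EMULATE(...)` notation are hypothetical names, not part of any real hypervisor.

```python
# Hypothetical instruction names; a real x86 hypervisor works on machine code, not strings.
# CLI, STI and HLT are privileged; POPF is a classic "sensitive but unprivileged" case.
SENSITIVE = {"CLI", "STI", "HLT", "POPF"}


class ToyFullVirtualizer:
    """Toy model of direct execution plus binary translation with a translation cache."""

    def __init__(self) -> None:
        self.translation_cache: dict[str, str] = {}
        self.translations_performed = 0

    def translate(self, instr: str) -> str:
        # Translate each sensitive instruction only once; later hits come from the cache.
        if instr not in self.translation_cache:
            self.translations_performed += 1
            self.translation_cache[instr] = f"EMULATE({instr})"
        return self.translation_cache[instr]

    def run(self, guest_program: list[str]) -> list[str]:
        """Return what actually executes on the host for an unmodified guest program."""
        executed = []
        for instr in guest_program:
            if instr in SENSITIVE:
                executed.append(self.translate(instr))  # binary translation
            else:
                executed.append(instr)  # direct execution
        return executed
```

For example, `ToyFullVirtualizer().run(["MOV", "ADD", "CLI", "MOV", "CLI"])` returns `["MOV", "ADD", "EMULATE(CLI)", "MOV", "EMULATE(CLI)"]`, and the second `CLI` is served from the cache, so only one translation is performed. Note that the guest program itself never changes, which mirrors the guest being unaware of virtualization.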

In paravirtualization, on the other hand, the hypervisor does not simulate the underlying hardware. Instead, it provides a hypercall mechanism, which the guest operating system uses to execute sensitive CPU instructions. This technique is less universal than full virtualization because it requires modifications to the guest operating system, but it usually provides better performance. Note also that, unlike in full virtualization, the guest operating system is aware that it is virtualized.
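The hypercall idea can be sketched as a toy Python model, assuming a guest kernel that has been modified so that, instead of issuing a sensitive instruction such as disabling interrupts, it calls a single entry point exposed by the hypervisor. The class names and the hypercall names `disable_interrupts` and `enable_interrupts` are hypothetical; real hypercall interfaces (for example Xen's) are defined at the machine level, not as Python methods.

```python
class ToyHypervisor:
    """Hypothetical hypervisor exposing a hypercall interface to paravirtualized guests."""

    def __init__(self) -> None:
        self.interrupts_enabled = True
        self.hypercall_count = 0

    def hypercall(self, name: str, *args):
        # Single, well-defined entry point that the modified guest calls into.
        self.hypercall_count += 1
        handler = getattr(self, f"_hc_{name}", None)
        if handler is None:
            raise ValueError(f"unknown hypercall: {name}")
        return handler(*args)

    def _hc_disable_interrupts(self) -> None:
        self.interrupts_enabled = False

    def _hc_enable_interrupts(self) -> None:
        self.interrupts_enabled = True


class ParavirtualizedGuestKernel:
    """Guest kernel modified to ask the hypervisor instead of executing sensitive instructions."""

    def __init__(self, hypervisor: ToyHypervisor) -> None:
        self.hv = hypervisor

    def critical_section(self, work):
        # An unmodified kernel would execute CLI/STI here; this guest uses hypercalls.
        self.hv.hypercall("disable_interrupts")
        try:
            return work()
        finally:
            self.hv.hypercall("enable_interrupts")
```

The design point is that the guest knowingly cooperates with the hypervisor: nothing has to be intercepted or translated, which is where the performance benefit comes from, but the guest kernel code had to be changed to make these calls.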

Figure 2 – Difference between full virtualization and paravirtualization

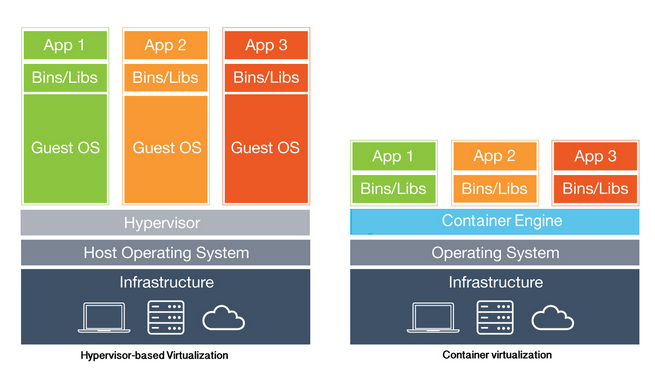

Containerization, in turn, is a form of operating system-level virtualization: containers share the kernel and resources of the same host operating system while remaining isolated from one another to some degree. Because they share the host system, containers introduce minimal overhead compared to other forms of virtualization, so they consume far fewer additional resources than, for example, virtual machines. The host operating system can allocate and release resources for containers dynamically. The downside is that container isolation is weaker than in classical virtualization solutions, since all containers run on a single shared kernel.
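On Linux, the host kernel isolates containers with namespaces and enforces their resource allocations with control groups (cgroups). The following sketch assumes a Linux host using the cgroup v2 unified hierarchy, mounted at `/sys/fs/cgroup` as seen from inside a typical Docker container, and reads the memory and CPU limits the host has assigned to the current container. The function names are illustrative and not part of any library.

```python
from pathlib import Path
from typing import Optional

# Assumption: cgroup v2 unified hierarchy mounted at this path inside the container.
CGROUP_ROOT = Path("/sys/fs/cgroup")


def parse_memory_max(text: str) -> Optional[int]:
    """Return the memory limit in bytes, or None if the cgroup is unlimited ("max")."""
    value = text.strip()
    return None if value == "max" else int(value)


def parse_cpu_max(text: str) -> Optional[float]:
    """Return the CPU quota as a number of CPUs, or None if unlimited.

    cpu.max holds "<quota> <period>" in microseconds; "50000 100000" means half a CPU.
    """
    quota, period = text.split()
    return None if quota == "max" else int(quota) / int(period)


def read_limits(root: Path = CGROUP_ROOT) -> dict:
    """Read the limits the host kernel enforces on the current cgroup (e.g. a container)."""
    limits = {}
    memory_file = root / "memory.max"
    cpu_file = root / "cpu.max"
    if memory_file.exists():
        limits["memory_bytes"] = parse_memory_max(memory_file.read_text())
    if cpu_file.exists():
        limits["cpus"] = parse_cpu_max(cpu_file.read_text())
    return limits
```

For example, a container started with `docker run --memory=256m --cpus=0.5` would typically see `268435456` in `memory.max` and `50000 100000` in `cpu.max`, so `read_limits()` would return `{"memory_bytes": 268435456, "cpus": 0.5}`. Files that do not exist (for example on a cgroup v1 host) are simply skipped.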

Figure 3 – Virtualization vs. Containerization, source: blackmagicboxes.com

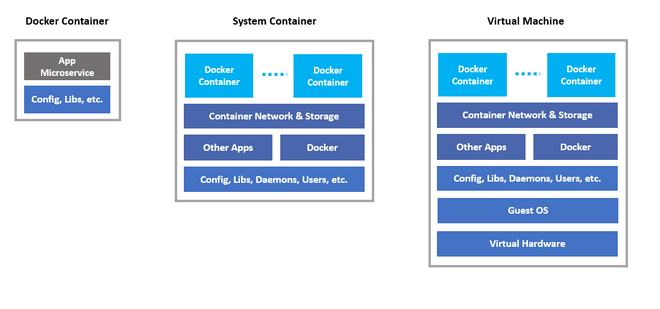

The most popular ways of using containerization technology are application containers and system containers.

Figure 4 – Comparison of application container, system container, and virtual machine, source: nestybox

Another interesting solution is nested virtualization: by using system containers or virtual machines, we can introduce an additional layer of abstraction. Figure 5 shows an architecture built on bare metal (without a host OS), with three system containers and a Docker Engine managing the containers.

Figure 5 – Example of containerization and nested virtualization, source: docker.com

Today, applications can be categorized by the technologies they use or by their execution architecture. One of the best-known distinctions is between monolithic and microservices architectures. The latter is closely associated with containers: the application is deployed across dozens, hundreds, or even thousands of containers (Netflix has over 1,000 microservices), which are managed by an orchestrator and by the tools of the cloud platform on which the application is deployed. Such applications are often called cloud-native because they were designed from the start to run in the cloud. As a result, they anticipate failures and continue to operate and scale reliably, even when their underlying infrastructure experiences disruptions or outages.

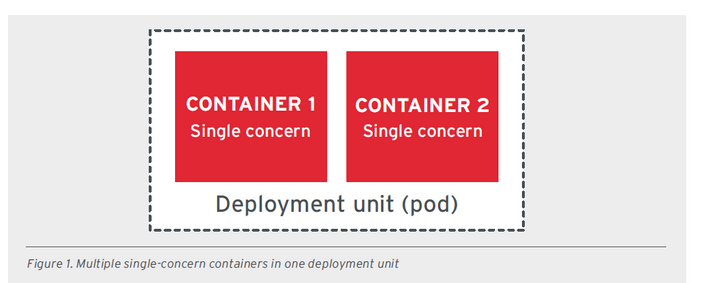

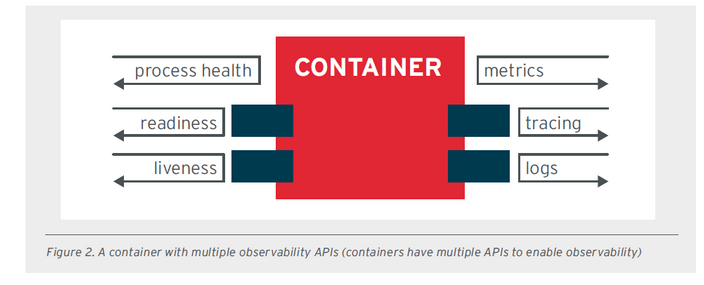

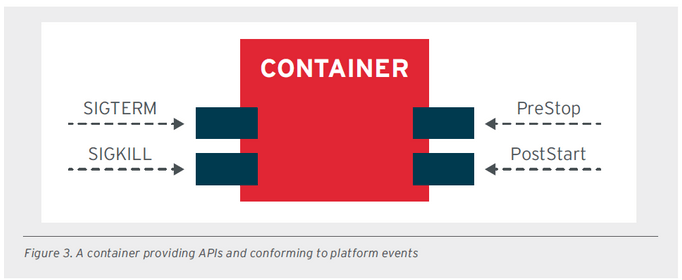

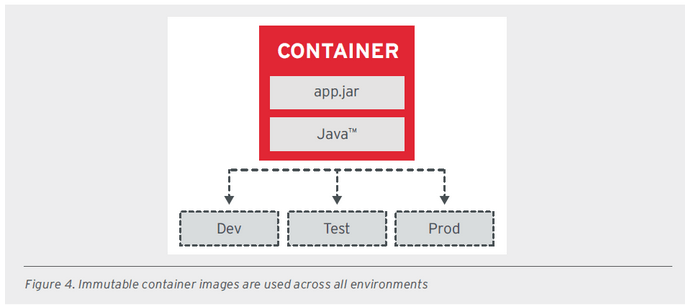

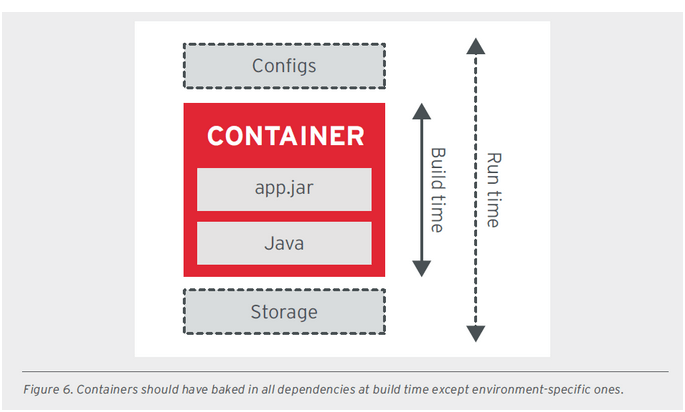

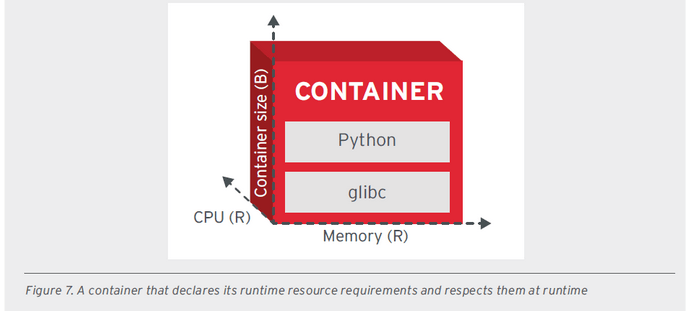

To support cloud-native applications, Red Hat proposed a set of principles for application containers in 2017, which can be compared to principles such as SOLID in object-oriented programming. The principles are as follows:

All images illustrating these principles were taken from a document published by Red Hat:

https://dzone.com/whitepapers/principles-of-container-based-application-design
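As one concrete illustration, the life-cycle conformance principle from that document asks that a container react to lifecycle events sent by the platform. In practice, both `docker stop` and Kubernetes stop a container by sending SIGTERM to its main process and send SIGKILL only after a grace period. Below is a minimal Python sketch, assuming a Linux container whose main process is this Python program; the `GracefulWorker` class and its `handle_one` callback are hypothetical names used only for illustration.

```python
import signal
import threading
from typing import Callable


class GracefulWorker:
    """Hypothetical service loop that shuts down cleanly when the platform sends SIGTERM."""

    def __init__(self) -> None:
        self._stop = threading.Event()
        self.cleaned_up = False

    def install_handlers(self) -> None:
        # Must be called from the main thread (a restriction of Python's signal module).
        signal.signal(signal.SIGTERM, self._on_sigterm)

    def _on_sigterm(self, signum, frame) -> None:
        # Only record the request here; the actual cleanup runs in the main loop.
        self._stop.set()

    @property
    def stopping(self) -> bool:
        return self._stop.is_set()

    def serve(self, handle_one: Callable[[], None], poll_interval: float = 0.1) -> None:
        # Keep doing units of work until SIGTERM arrives, then clean up and return.
        while not self._stop.is_set():
            handle_one()
            self._stop.wait(poll_interval)
        self.shutdown()

    def shutdown(self) -> None:
        # Placeholder for finishing in-flight requests, flushing buffers, closing connections.
        self.cleaned_up = True
```

A real service would call `install_handlers()` at startup and then `serve(...)` with its own unit of work. Using the exec form of `CMD` or `ENTRYPOINT` in the Dockerfile (for example `CMD ["python", "worker.py"]`, where `worker.py` is a hypothetical file name) makes Python the container's main process, so it receives SIGTERM directly instead of through a shell that may not forward it.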

We hope this comprehensive article has given you useful insights into containerization and virtualization. We also encourage you to explore the links in the sources, which explain the concepts discussed here in more detail. If you're interested in containerization, we recommend our series of articles about Docker.

Sources:
